MRCpy.DWGCS

- class MRCpy.DWGCS(loss='0-1', deterministic=True, random_state=None, fit_intercept=False, D=4, sigma_=None, B=1000, solver='adam', alpha=0.01, stepsize='decay', mini_batch_size=None, max_iters=None, phi='linear', **phi_kwargs)

Double-Weighting General Covariate Shift

The class DWGCS implements the method Double-Weighting for General Covariate Shift (DWGCS) proposed in [1]. It is designed for supervised classification under covariate shift.

DW-GCS provides adaptation to covariate shift when a set of training samples and a set of unlabeled samples from the testing distribution are available at learning time, without requiring any prior knowledge about the supports of the training and testing distributions.
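To make the setting concrete, the following sketch (plain NumPy, not part of MRCpy) draws training and testing instances from different marginal distributions while keeping the same labeling rule p(y|x), which is what covariate shift means. The Gaussian means, the linear labeling rule and the helper name are illustrative assumptions.

```python
import numpy as np

def make_covariate_shift_data(n_train=200, n_test=100, seed=0):
    """Toy covariate-shift data: same p(y|x), different p(x) (illustrative only)."""
    rng = np.random.default_rng(seed)
    # Training and testing instances come from shifted Gaussians.
    x_tr = rng.normal(loc=-1.0, scale=1.0, size=(n_train, 2))
    x_te = rng.normal(loc=1.0, scale=1.0, size=(n_test, 2))

    def label(x):
        # Shared conditional p(y|x): one fixed linear rule for both distributions.
        return (x[:, 0] + x[:, 1] > 0).astype(int)

    y_tr = label(x_tr)
    y_te = label(x_te)  # unavailable at learning time; kept only for evaluation
    return x_tr, y_tr, x_te, y_te

x_tr, y_tr, x_te, y_te = make_covariate_shift_data()
print(x_tr.mean(axis=0).round(1), x_te.mean(axis=0).round(1))
```

In this setting only x_tr, y_tr and the unlabeled x_te would be available when calling fit(xTr, yTr, xTe); y_te serves only to measure the error afterwards.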

It implements 0-1 and log loss, and it can be used with multiple feature mappings.
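As a sketch of what the two losses measure, assume a classification rule h returns a probability h(y|x) for each label. Under the usual minimax risk classification convention, the 0-1 loss at (x, y) is 1 - h(y|x), the probability of error, and the log loss is -log h(y|x). The helper names below are hypothetical and only illustrate these formulas.

```python
import numpy as np

def zero_one_loss(h_probs, y):
    """Expected 0-1 loss of a randomized rule: 1 - h(y|x), averaged over examples."""
    h_probs = np.asarray(h_probs, dtype=float)
    return float(np.mean(1.0 - h_probs[np.arange(len(y)), y]))

def log_loss(h_probs, y):
    """Log loss: -log h(y|x), averaged over examples."""
    h_probs = np.asarray(h_probs, dtype=float)
    return float(np.mean(-np.log(h_probs[np.arange(len(y)), y])))

# Two examples, three classes; row i holds h(.|x_i).
h = [[0.7, 0.2, 0.1],
     [0.25, 0.5, 0.25]]
y = np.array([0, 1])
print(zero_one_loss(h, y))  # mean of 0.3 and 0.5, i.e. about 0.4
print(log_loss(h, y))       # mean of -log(0.7) and -log(0.5)
```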

See also

For more information about DWGCS, one can refer to the following paper:

[1] Segovia-Martín, J. I., Mazuelas, S., & Liu, A. (2023). Double-Weighting for Covariate Shift Adaptation. International Conference on Machine Learning (ICML) 2023.

@InProceedings{SegMazLiu:23,
    title = {Double-Weighting for Covariate Shift Adaptation},
    author = {Segovia-Mart{\'i}n, Jos{\'e} I. and Mazuelas, Santiago and Liu, Anqi},
    booktitle = {Proceedings of the 40th International Conference on Machine Learning},
    pages = {30439--30457},
    year = {2023},
    volume = {202},
    series = {Proceedings of Machine Learning Research},
    month = {23--29 Jul},
    publisher = {PMLR},
}

- Parameters

- loss

str {'0-1', 'log'}, default='0-1'. Type of loss function to use for risk minimization. The 0-1 loss quantifies the probability of classification error at a given example for a given rule. The log loss quantifies the negative log-likelihood at a given example for a given rule.

- deterministic

bool, default=True. Whether the prediction of the labels should be done in a deterministic way (given a fixed random_state in the case of using random Fourier or random ReLU features).

- random_state

int, RandomState instance, default=None. Random seed used to produce the random weights when the 'fourier' and 'relu' feature mapping options are used.

- fit_intercept

bool, default=False. Whether to calculate the intercept for MRCs. If set to False, no intercept will be used in calculations (i.e., data is expected to be already centered).

- D

int, default=4. Hyperparameter that balances the trade-off between the error in the expectation estimates and the confidence of the classification.

- B

int, default=1000. Parameter that bounds the maximum value of the weights beta associated with the training samples.

- solver : {'cvx', 'grad', 'adam'}, default='adam'

Method used to solve the optimization problem. To choose a solver, you might want to consider the following aspects:

- 'cvx'

Solves the optimization problem using the CVXPY library. It obtains an accurate solution but requires more time than the other methods. Note that the library uses the GUROBI solver in CVXPY, for which one might need to request a license. A free license can be requested from Gurobi.

- 'grad'

Solves the optimization using stochastic gradient descent. The parameters max_iters, stepsize and mini_batch_size determine the number of iterations, the learning rate and the batch size for gradient computation, respectively. Note that the implementation uses Nesterov's gradient descent in the case of ReLU and threshold features, and the above parameters do not affect the optimization in this case.

- 'adam'

Solves the optimization using stochastic gradient descent with the Adam optimizer. The parameters max_iters, alpha and mini_batch_size determine the number of iterations, the learning rate and the batch size for gradient computation, respectively. Note that the implementation uses Nesterov's gradient descent in the case of ReLU and threshold features, and the above parameters do not affect the optimization in this case.

- alpha

float, default=0.01. Learning rate for the 'adam' solver.

- mini_batch_size

int, default=1 or 32. The size of the batch used for computing the gradient with stochastic gradient descent and the Adam optimizer. For stochastic gradient descent the default is 1, and for the Adam optimizer the default is 32.

- max_iters

int, default=100000 or 5000 or 2000. The maximum number of iterations to use with the 'grad' or 'adam' solver. The default value is 100000 for the 'grad' solver, 5000 for the 'adam' solver and 2000 for Nesterov's gradient descent.

- phi

str or BasePhi instance, default='linear'. Type of feature mapping function to use for mapping the input data. The currently available feature mapping methods are 'fourier', 'relu', and 'linear'. Users can also implement their own feature mapping object (which should be a BasePhi instance) and pass it to this argument. Note that when using the 'fourier' feature mapping, training and testing instances are expected to be normalized. To implement a feature mapping, please go through the Feature Mappings section.

- 'linear'

It uses the identity feature map, referred to as the Linear feature map. See class BasePhi.

- 'fourier'

It uses the Random Fourier Feature map. See class RandomFourierPhi.

- 'relu'

It uses Rectified Linear Unit (ReLU) features. See class RandomReLUPhi.

- **phi_kwargs : Additional parameters for feature mappings.

Groups the multiple optional parameters for the corresponding feature mappings (phi). For example, in the case of Fourier features, the number of features is given by the n_components parameter, which can be passed as an argument: DWGCS(loss='log', phi='fourier', n_components=300). The list of arguments for each feature mapping class can be found in the corresponding documentation.
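The three built-in options can be illustrated with a NumPy sketch. This is not the MRCpy implementation of BasePhi, RandomFourierPhi or RandomReLUPhi: it uses the classic random Fourier construction of Rahimi and Recht (cosine features with a random phase, approximating a Gaussian kernel) and a plain random ReLU layer. The function name, the sigma bandwidth and the weight distributions are assumptions; the n_components keyword only mirrors the way phi_kwargs forwards the number of features, and the seeded generator plays the role of random_state.

```python
import numpy as np

def feature_map(X, phi="linear", n_components=100, sigma=1.0, random_state=None):
    """Illustrative feature maps (hypothetical helper, not MRCpy's API).

    'linear'  : identity map, phi(x) = x
    'fourier' : random Fourier features approximating a Gaussian kernel
    'relu'    : random ReLU features, max(0, w.x + b)
    """
    X = np.asarray(X, dtype=float)
    if phi == "linear":
        return X
    rng = np.random.default_rng(random_state)
    d = X.shape[1]
    if phi == "fourier":
        # w ~ N(0, sigma^-2 I), b ~ U[0, 2*pi]  (Rahimi & Recht construction)
        W = rng.normal(scale=1.0 / sigma, size=(d, n_components))
        b = rng.uniform(0.0, 2.0 * np.pi, size=n_components)
        return np.sqrt(2.0 / n_components) * np.cos(X @ W + b)
    if phi == "relu":
        W = rng.normal(size=(d, n_components))
        b = rng.normal(size=n_components)
        return np.maximum(0.0, X @ W + b)
    raise ValueError(f"unknown feature mapping: {phi!r}")

X = np.array([[0.0, 0.0], [0.5, -0.5]])
Z = feature_map(X, phi="fourier", n_components=20000, random_state=0)
approx = Z[0] @ Z[1]                                  # inner product of random features
exact = np.exp(-np.sum((X[0] - X[1]) ** 2) / 2.0)     # Gaussian kernel with sigma = 1
print(round(approx, 3), round(exact, 3))
```

With many components the inner product of the random features approaches the Gaussian kernel value, which is why the number of features (n_components) trades accuracy against cost.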

Methods

- DWKMM(xTr, xTe): Obtain training and testing weights.
- compute_lambda(xTe, tau_, n_classes): Compute the deviation in the mean estimate tau using the given testing instances.
- compute_phi(X): Compute the feature mapping corresponding to the instances given for learning the classifiers and for prediction.
- compute_tau(xTr, yTr): Compute the mean estimate tau using the given training instances.
- error(X, Y): Return the mean error obtained for the given test data and labels.
- fit(xTr, yTr[, xTe]): Fit the MRC model.
- get_metadata_routing(): Get metadata routing of this object.
- get_params([deep]): Get parameters for this estimator.
- get_upper_bound(): Return the upper bound on the expected loss for the fitted classifier.
- minimax_risk(X, tau_, lambda_, n_classes): Solve the marginally constrained minimax risk optimization problem for different types of loss (0-1 and log loss).
- predict(X): Predict classes for new instances using a fitted model.
- predict_proba(X): Compute the conditional probabilities corresponding to each class for the given unlabeled instances.
- psi(phi_mu, phi): Compute the psi function in the objective using the given solution mu and the feature mapping corresponding to a single instance.
- score(X, y[, sample_weight]): Return the mean accuracy on the given test data and labels.
- set_fit_request(*[, xTe, xTr, yTr]): Request metadata passed to the fit method.
- set_params(**params): Set the parameters of this estimator.
- set_score_request(*[, sample_weight]): Request metadata passed to the score method.

- DWKMM(xTr, xTe)

Obtain training and testing weights.

Computes the weights associated with the training and testing samples by solving the DW-KMM problem.

- Parameters

- xTr

array-like of shape (n_samples, n_dimensions). Training instances used in computing the training weights beta and the testing weights alpha. n_samples is the number of training samples and n_dimensions is the number of features.

- xTe

array-like of shape (n_samples, n_dimensions). Testing instances used in computing the training weights beta and the testing weights alpha. n_samples is the number of testing samples and n_dimensions is the number of features.

- Returns

- self :

Weights self.beta_ and self.alpha_
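The DW-KMM problem itself is specified in [1] and is not reproduced here. As a simpler point of reference, the sketch below computes classic single-weighting kernel mean matching (KMM) weights of Huang et al. for the training samples only: it minimizes the squared RKHS distance between the beta-weighted training mean and the testing mean under a Gaussian kernel, keeping each weight in [0, B] in the same way the B parameter bounds beta. It uses projected gradient descent and omits the sum-of-weights constraint of the original KMM; the function names, kernel bandwidth and iteration count are assumptions.

```python
import numpy as np

def gaussian_kernel(X1, X2, sigma=1.0):
    """k(a, b) = exp(-||a - b||^2 / (2 sigma^2)) for all pairs of rows."""
    sq = (np.sum(X1**2, axis=1)[:, None] + np.sum(X2**2, axis=1)[None, :]
          - 2.0 * X1 @ X2.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def kmm_weights(x_tr, x_te, B=1000.0, sigma=1.0, n_iter=500):
    """Single-weighting KMM sketch (not DW-KMM), solved by projected gradient.

    Minimizes J(beta) = beta^T K beta / n^2 - 2 beta^T kappa / (n t) + const
    subject to 0 <= beta_i <= B.
    """
    n, t = len(x_tr), len(x_te)
    K = gaussian_kernel(x_tr, x_tr, sigma)
    kappa = gaussian_kernel(x_tr, x_te, sigma).sum(axis=1)
    # Step 1/L, where L = 2 * lambda_max(K) / n^2 is the gradient's Lipschitz constant.
    step = n**2 / (2.0 * np.linalg.eigvalsh(K)[-1])
    beta = np.ones(n)  # uniform weights: a feasible start whenever B >= 1
    for _ in range(n_iter):
        grad = 2.0 * K @ beta / n**2 - 2.0 * kappa / (n * t)
        beta = np.clip(beta - step * grad, 0.0, B)  # project onto the box [0, B]
    return beta

def mean_discrepancy(beta, x_tr, x_te, sigma=1.0):
    """Squared RKHS distance between the weighted training mean and the testing mean."""
    n, t = len(x_tr), len(x_te)
    return float(beta @ gaussian_kernel(x_tr, x_tr, sigma) @ beta / n**2
                 - 2.0 * beta @ gaussian_kernel(x_tr, x_te, sigma).sum(axis=1) / (n * t)
                 + gaussian_kernel(x_te, x_te, sigma).sum() / t**2)

rng = np.random.default_rng(0)
x_tr = rng.normal(-0.5, 1.0, size=(100, 1))
x_te = rng.normal(0.5, 1.0, size=(50, 1))
beta = kmm_weights(x_tr, x_te, B=10.0)
print(mean_discrepancy(np.ones(100), x_tr, x_te), mean_discrepancy(beta, x_tr, x_te))
```

Training points lying where testing data is dense receive larger weights, which reduces the mismatch between the two means; DW-KMM additionally computes testing weights alpha, which this sketch does not attempt.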

- __init__(loss='0-1', deterministic=True, random_state=None, fit_intercept=False, D=4, sigma_=None, B=1000, solver='adam', alpha=0.01, stepsize='decay', mini_batch_size=None, max_iters=None, phi='linear', **phi_kwargs)

Initialize self. See help(type(self)) for accurate signature.

- compute_lambda(xTe, tau_, n_classes)

Compute the deviation in the mean estimate tau using the given testing instances.

- Parameters

- xTe

array-like of shape (n_samples, n_dimensions). Testing instances used for solving the minimax risk optimization problem.

- tau_

array-like of shape (n_features*n_classes). The mean estimates for the expectations of feature mappings.

- n_classes

int. Number of labels in the dataset.

- Returns

- lambda_ :

Confidence vector

- compute_phi(X)

Compute the feature mapping corresponding to the instances given for learning the classifiers and for prediction.

- compute_tau(xTr, yTr)

Compute mean estimate tau using the given training instances.

- Parameters

- Returns

- tau_ :

Mean expectation estimate
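In minimax risk classifiers, the feature mapping of a labeled instance is commonly taken as Phi(x, y) = e_y ⊗ phi(x), which yields the length n_features*n_classes stated for tau_, and the mean estimate averages Phi over the training samples. The sketch below assumes that construction and, as a further assumption about the covariate-shift case, lets each training sample carry a weight beta_i (for instance, weights such as those DWKMM produces); with all weights equal to 1 it reduces to the plain empirical mean. The helper names are hypothetical and this is not the MRCpy code.

```python
import numpy as np

def joint_feature(phi_x, y, n_classes):
    """Phi(x, y) = e_y kron phi(x): phi(x) placed in the block of class y."""
    n, d = phi_x.shape
    out = np.zeros((n, n_classes * d))
    for c in range(n_classes):
        mask = y == c
        out[mask, c * d:(c + 1) * d] = phi_x[mask]
    return out

def weighted_tau(phi_x, y, n_classes, beta=None):
    """Mean estimate tau = (1/n) * sum_i beta_i * Phi(x_i, y_i)."""
    n = len(y)
    beta = np.ones(n) if beta is None else np.asarray(beta, dtype=float)
    return (beta[:, None] * joint_feature(phi_x, y, n_classes)).sum(axis=0) / n

phi_x = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
y = np.array([0, 1, 0])
print(weighted_tau(phi_x, y, n_classes=2))  # (6/3, 8/3, 3/3, 4/3)
```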

- error(X, Y)

Return the mean error obtained for the given test data and labels.

- Parameters

- Returns

- error : float

Mean error of the learned MRC classifier.
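The mean error is the fraction of misclassified instances, so in the single-label case it equals one minus the (unweighted) accuracy returned by score. A minimal sketch with made-up labels; the helper name is hypothetical.

```python
import numpy as np

def mean_error(y_pred, y_true):
    """Fraction of instances whose predicted label differs from the true label."""
    y_pred, y_true = np.asarray(y_pred), np.asarray(y_true)
    return float(np.mean(y_pred != y_true))

y_true = np.array([0, 1, 1, 2, 0])
y_pred = np.array([0, 2, 1, 2, 1])   # two mistakes out of five
err = mean_error(y_pred, y_true)
acc = float(np.mean(y_pred == y_true))
print(err, acc)  # 0.4 0.6
```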

- fit(xTr, yTr, xTe=None)

Fit the MRC model.

Computes the parameters required for the minimax risk optimization and then calls the minimax_risk function to solve the optimization.

- Parameters

- xTr

array-like of shape (n_samples, n_dimensions). Training instances used for calculating the expectation estimates that constrain the uncertainty set of the minimax risk classification, and for solving the minimax risk optimization problem. n_samples is the number of training samples and n_dimensions is the number of features.

- yTr

array-like of shape (n_samples, 1), default=None. Labels corresponding to the training instances, used only to compute the expectation estimates.

- xTe

array-like of shape (n_samples2, n_dimensions), default=None. These instances will be used in the minimax risk optimization. These extra instances are generally a smaller set and give an advantage in training time.

- Returns

- self :

Fitted estimator

- get_metadata_routing()

Get metadata routing of this object.

Please check the User Guide on how the routing mechanism works.

- Returns

- routing : MetadataRequest

A MetadataRequest encapsulating routing information.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns

- params : dict

Parameter names mapped to their values.

- get_upper_bound()

Returns the upper bound on the expected loss for the fitted classifier.

- Returns

- upper_bound : float

Upper bound of the expected loss for the fitted classifier.

- minimax_risk(X, tau_, lambda_, n_classes)

Solves the marginally constrained minimax risk optimization problem for different types of loss (0-1 and log loss). When the 'cvx' solver is not used, it uses SGD optimization for linear and random Fourier feature mappings and Nesterov's subgradient approach for the rest.
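The 'adam' solver combines three constructor parameters: alpha (learning rate), mini_batch_size and max_iters. The sketch below shows the textbook Adam update of Kingma and Ba driven by mini-batch gradients on a toy least-squares objective; it only illustrates how those three parameters interact and is not the MRCpy objective or code. mini_batch_size=32 and max_iters=5000 echo the documented 'adam' defaults and alpha=0.01 echoes the class signature; beta1, beta2 and eps are the usual Adam defaults, assumed here.

```python
import numpy as np

def adam_minibatch(grad_fn, w0, n_samples, alpha=0.01, mini_batch_size=32,
                   max_iters=5000, beta1=0.9, beta2=0.999, eps=1e-8, seed=0):
    """Minimize an average loss with Adam on random mini-batches.

    grad_fn(w, idx) must return the gradient of the loss averaged over samples idx.
    """
    rng = np.random.default_rng(seed)
    w = np.array(w0, dtype=float)
    m = np.zeros_like(w)  # first-moment (mean) estimate
    v = np.zeros_like(w)  # second-moment (uncentered variance) estimate
    for t in range(1, max_iters + 1):
        idx = rng.choice(n_samples, size=mini_batch_size, replace=False)
        g = grad_fn(w, idx)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g**2
        m_hat = m / (1 - beta1**t)  # bias corrections
        v_hat = v / (1 - beta2**t)
        w -= alpha * m_hat / (np.sqrt(v_hat) + eps)
    return w

# Toy least-squares problem: recover w_true from noiseless data.
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
w_true = np.array([1.0, -2.0, 0.5])
y = X @ w_true

def grad_fn(w, idx):
    Xb, yb = X[idx], y[idx]
    return 2.0 * Xb.T @ (Xb @ w - yb) / len(idx)

w_hat = adam_minibatch(grad_fn, np.zeros(3), n_samples=len(y))
print(np.round(w_hat, 2))
```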

- Parameters

- X

array-like of shape (n_samples, n_dimensions). Training instances used for solving the minimax risk optimization problem.

- tau_

array-like of shape (n_features*n_classes). The mean estimates for the expectations of feature mappings.

- lambda_

array-like of shape (n_features*n_classes). The variance in the mean estimates for the expectations of the feature mappings.

- n_classes

int. Number of labels in the dataset.

- Returns

- self :

Fitted estimator

- predict(X)

Predicts classes for new instances using a fitted model.

Returns the predicted classes for the given instances in X using the probabilities given by the function predict_proba.
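Given a matrix of conditional probabilities such as the one predict_proba returns, a label can be chosen deterministically (the most probable class) or by sampling from h(.|x). The sketch below assumes this is the distinction controlled by the deterministic parameter, with a seeded generator standing in for random_state; the helper name is hypothetical.

```python
import numpy as np

def predict_from_proba(proba, deterministic=True, random_state=None):
    """Turn an (n_samples, n_classes) probability matrix into labels."""
    proba = np.asarray(proba, dtype=float)
    if deterministic:
        return np.argmax(proba, axis=1)  # most probable class (ties go to the lowest index)
    rng = np.random.default_rng(random_state)
    # Randomized rule: draw each label from its row distribution h(.|x).
    return np.array([rng.choice(proba.shape[1], p=row) for row in proba])

proba = np.array([[0.8, 0.2],
                  [0.3, 0.7],
                  [0.5, 0.5]])
print(predict_from_proba(proba))                                      # [0 1 0]
print(predict_from_proba(proba, deterministic=False, random_state=0))
```

Fixing the seed makes the randomized predictions reproducible, which mirrors the role a fixed random_state plays in the parameter description.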

- predict_proba(X)

Computes conditional probabilities corresponding to each class for the given unlabeled instances.

- psi(phi_mu, phi)

Function to compute the psi function in the objective using the given solution mu and the feature mapping corresponding to a single instance.

- score(X, y, sample_weight=None)

Return the mean accuracy on the given test data and labels.

In multi-label classification, this is the subset accuracy which is a harsh metric since you require for each sample that each label set be correctly predicted.
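For label-indicator matrices, subset accuracy counts a sample as correct only when its whole label vector matches. A minimal sketch with illustrative arrays; the helper name is hypothetical.

```python
import numpy as np

def subset_accuracy(Y_true, Y_pred):
    """Fraction of samples whose entire label vector is predicted exactly."""
    Y_true, Y_pred = np.asarray(Y_true), np.asarray(Y_pred)
    return float(np.mean(np.all(Y_true == Y_pred, axis=1)))

Y_true = np.array([[1, 0, 1],
                   [0, 1, 0],
                   [1, 1, 0]])
Y_pred = np.array([[1, 0, 1],   # exact match
                   [0, 1, 1],   # one label wrong, so the whole sample counts as wrong
                   [1, 1, 0]])  # exact match
print(subset_accuracy(Y_true, Y_pred))  # 2 of 3 samples match exactly
```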

- set_fit_request(*, xTe: Union[bool, None, str] = '$UNCHANGED$', xTr: Union[bool, None, str] = '$UNCHANGED$', yTr: Union[bool, None, str] = '$UNCHANGED$') → MRCpy.dwgcs.DWGCS

Request metadata passed to the fit method.

Note that this method is only relevant if enable_metadata_routing=True (see sklearn.set_config()). Please see the User Guide on how the routing mechanism works.

The options for each parameter are:

- True: metadata is requested, and passed to fit if provided. The request is ignored if metadata is not provided.
- False: metadata is not requested and the meta-estimator will not pass it to fit.
- None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.
- str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

New in version 1.3.

Note

This method is only relevant if this estimator is used as a sub-estimator of a meta-estimator, e.g. used inside a Pipeline. Otherwise it has no effect.

- Parameters

- xTe : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for the xTe parameter in fit.

- xTr : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for the xTr parameter in fit.

- yTr : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for the yTr parameter in fit.

- Returns

- self : object

The updated object.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters

- **params : dict

Estimator parameters.

- Returns

- self : estimator instance

Estimator instance.
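The <component>__<parameter> naming rule can be illustrated with a small helper that splits such keys at the first double underscore and groups them by component. This is a hypothetical sketch of the naming convention only; scikit-learn's own set_params also validates names and recurses into nested estimators. The step name 'clf' is an assumption.

```python
def group_nested_params(params):
    """Split '<component>__<parameter>' keys into per-component dictionaries.

    Keys without '__' are treated as parameters of the top-level object ('').
    Only the first '__' is split, so deeper nesting stays in the parameter name.
    """
    grouped = {}
    for key, value in params.items():
        component, sep, name = key.partition("__")
        if sep:
            grouped.setdefault(component, {})[name] = value
        else:
            grouped.setdefault("", {})[key] = value
    return grouped

print(group_nested_params({"clf__loss": "log", "clf__B": 100, "verbose": True}))
# {'clf': {'loss': 'log', 'B': 100}, '': {'verbose': True}}
```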

- set_score_request(*, sample_weight: Union[bool, None, str] = '$UNCHANGED$') → MRCpy.dwgcs.DWGCS

Request metadata passed to the score method.

Note that this method is only relevant if enable_metadata_routing=True (see sklearn.set_config()). Please see the User Guide on how the routing mechanism works.

The options for each parameter are:

- True: metadata is requested, and passed to score if provided. The request is ignored if metadata is not provided.
- False: metadata is not requested and the meta-estimator will not pass it to score.
- None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.
- str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

New in version 1.3.

Note

This method is only relevant if this estimator is used as a sub-estimator of a meta-estimator, e.g. used inside a Pipeline. Otherwise it has no effect.

- Parameters

- sample_weight : str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED

Metadata routing for the sample_weight parameter in score.

- Returns

- self : object

The updated object.

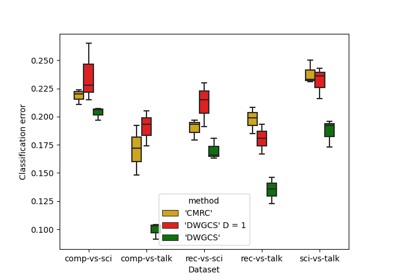

Examples using MRCpy.DWGCS¶

Example: Use of DWGCS (Double-Weighting General Covariate Shift) for Covariate Shift Adaptation